March 16, 2019 / 3:55 PM

Securing DNS Queries with Dotproxy


dotproxy (GitHub) is a robust, high-performance DNS-over-TLS proxy. It is intended to sit at the edge of a private network between clients speaking plaintext DNS and remote TLS-enabled upstream server(s) across an untrusted channel. dotproxy performs TLS origination on egress but listens for plaintext DNS on ingress, allowing it to be completely transparent to querying clients. By encrypting all outgoing DNS traffic, dotproxy protects against malicious eavesdropping and man-in-the-middle attacks.

Goals and Non-Goals

dotproxy attempts to meet the following goals:

- Exist transparently to plaintext-speaking DNS clients. In other words, clients should not observe any difference between sending queries through dotproxy's encryption layer and sending plaintext queries directly to the upstream resolver.

- Incur as little additional latency overhead as possible. Querying through dotproxy should not be significantly slower than querying the upstream directly.

- Optimize client performance through intelligent connection caching and reuse. There should exist a logic layer to preserve and manage a pool of many TCP connections to the upstream server, in order to reduce the cost of opening connections and performing TLS handshakes. This attempts to make the average query as performant as a UDP exchange without TLS.

- Provide abstractions for managing load distribution and fault tolerance among several upstream servers. A selection of load balancing policies should provide options to control request sharding using a strategy that is most appropriate for the network environment of the deployment.

- Report easily consumable and detailed metrics on application performance. Any type of non-streaming network proxy affords the opportunity to provide observability into the performance of the system. dotproxy should emit metrics on query latency, payload size, and low-level connection behaviors.
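To make the connection caching goal concrete, here is a minimal Python sketch of an idle-connection pool. It is an illustration only, not dotproxy's actual implementation (dotproxy itself is built with the Go toolchain); the class name `ConnectionPool`, its parameters, and the `dial`/`close` callbacks are all hypothetical, and the sketch is not thread-safe.

```python
import time
from collections import deque
from typing import Callable, Deque, Generic, Tuple, TypeVar

T = TypeVar("T")


class ConnectionPool(Generic[T]):
    """Keeps idle connections around so most queries skip TCP + TLS setup.

    Hypothetical illustration; names and defaults are assumptions.
    """

    def __init__(self, dial: Callable[[], T], close: Callable[[T], None],
                 capacity: int = 8, max_idle_seconds: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._dial = dial            # opens a fresh upstream connection
        self._close = close          # tears a connection down
        self._capacity = capacity    # maximum number of idle connections kept
        self._max_idle = max_idle_seconds
        self._clock = clock
        self._idle: Deque[Tuple[float, T]] = deque()
        self.dials = 0               # fresh connections opened so far
        self.reuses = 0              # queries served by a cached connection

    def take(self) -> T:
        # Prefer the most recently returned connection; discard stale ones,
        # since the upstream has likely closed them already.
        while self._idle:
            returned_at, conn = self._idle.pop()
            if self._clock() - returned_at <= self._max_idle:
                self.reuses += 1
                return conn
            self._close(conn)
        self.dials += 1
        return self._dial()

    def put(self, conn: T) -> None:
        # Return a connection after a successful exchange; drop it if full.
        if len(self._idle) >= self._capacity:
            self._close(conn)
            return
        self._idle.append((self._clock(), conn))
```

The ratio of `reuses` to `dials` is the kind of low-level connection metric the last goal refers to: the higher it is, the fewer queries pay for a handshake.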

That said, dotproxy has some non-goals as well:

- Provide protocol-aware intelligence. In the interest of reducing proxy overhead, dotproxy (generally) does not understand the DNS protocol and thus does not attempt to parse request and response packets. Since TLS encrypts the byte stream regardless of what it carries, there isn't much to gain from this information anyway.

- Cache query responses. As above, without knowledge of the protocol itself, dotproxy cannot intelligently cache query responses. Every query is forwarded exactly as-is.

- Support non-TLS upstream servers. dotproxy aims to provide DNS encryption so supporting plaintext upstreams is not a goal.
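Forwarding a query as-is still involves one small piece of framing: DNS over a stream transport (TCP, and therefore TLS) prefixes every message with a two-byte, big-endian length field (RFC 1035 section 4.2.2, reused by RFC 7858), while a UDP datagram carries the bare message. The Python sketch below shows that framing without parsing the DNS payload at all. The function names are hypothetical and this is not taken from dotproxy's source.

```python
import struct

MAX_DNS_MESSAGE = 0xFFFF  # the length prefix is 16 bits wide


def frame_for_stream(message: bytes) -> bytes:
    """Prefix an opaque DNS message with its length for TCP/TLS transport."""
    if len(message) > MAX_DNS_MESSAGE:
        raise ValueError("DNS message too large for a 16-bit length prefix")
    return struct.pack("!H", len(message)) + message


def recv_exact_from(sock):
    """Build a helper that reads exactly n bytes from a socket-like object."""
    def recv_exact(n: int) -> bytes:
        buf = b""
        while len(buf) < n:
            chunk = sock.recv(n - len(buf))
            if not chunk:
                raise ConnectionError("peer closed the connection mid-message")
            buf += chunk
        return buf
    return recv_exact


def read_framed(recv_exact) -> bytes:
    """Read one length-prefixed DNS message and return it without the prefix."""
    (length,) = struct.unpack("!H", recv_exact(2))
    return recv_exact(length)
```

A UDP query would be passed through `frame_for_stream` before being written to the TLS connection, and the response from `read_framed` would be sent back to the client as a plain datagram.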

Performance in Production

My deployment of dotproxy has, to date, served 0.4 million requests with an average round-trip latency of 13.7 ms. This represents, on average, 2.4 ms of proxy overhead over the average 11.3 ms RTT against my upstream resolver, Cloudflare.

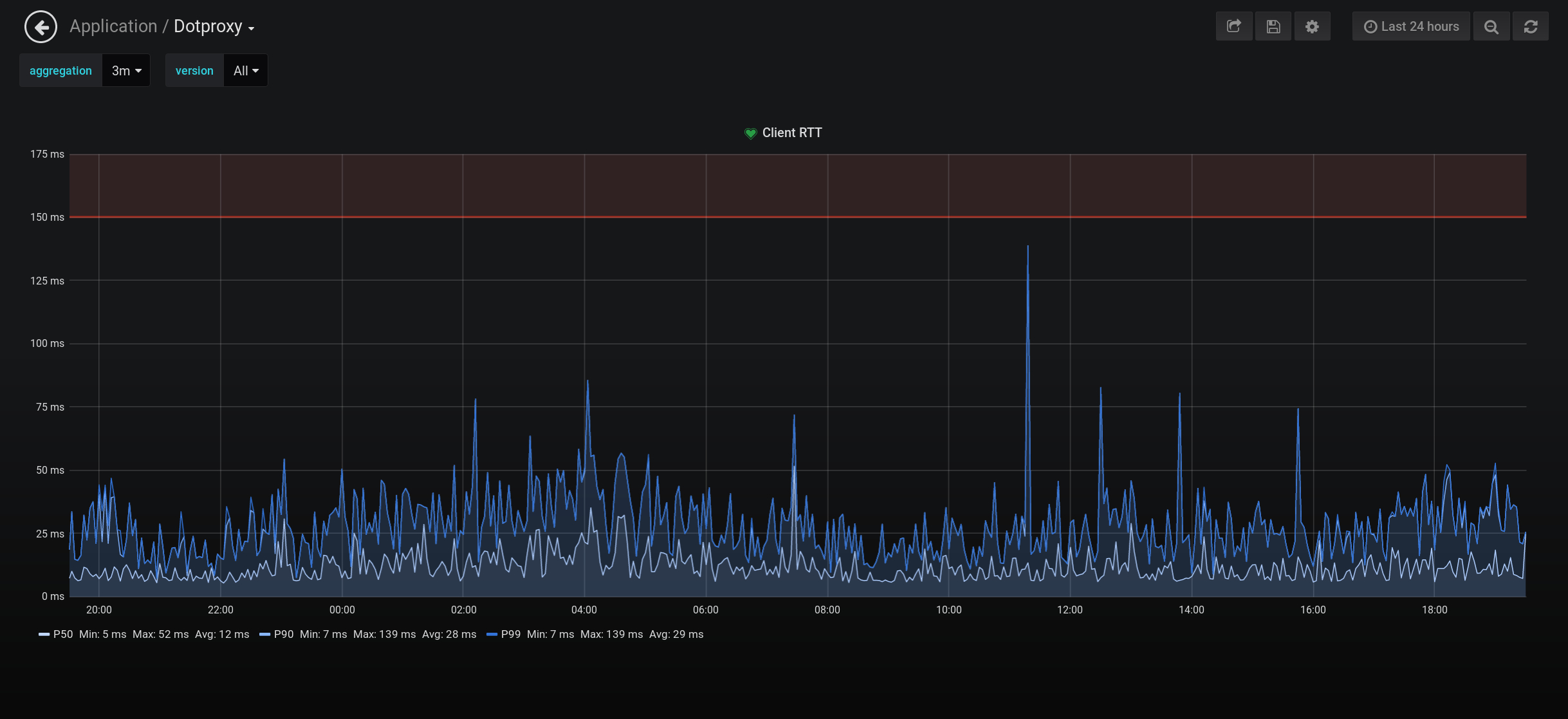

A summary dashboard I use for monitoring performance (which visualizes most metrics emitted by dotproxy):

Median client-side RTT is 11.7 ms with a P90 of 30.2 ms and P99 of 30.7 ms. The large difference between the median and the tail percentiles is due mostly to my network's QPS pattern: dotproxy generally does not receive enough traffic to keep all connections open at all times, and performing a TLS handshake with a newly opened connection can be quite slow.

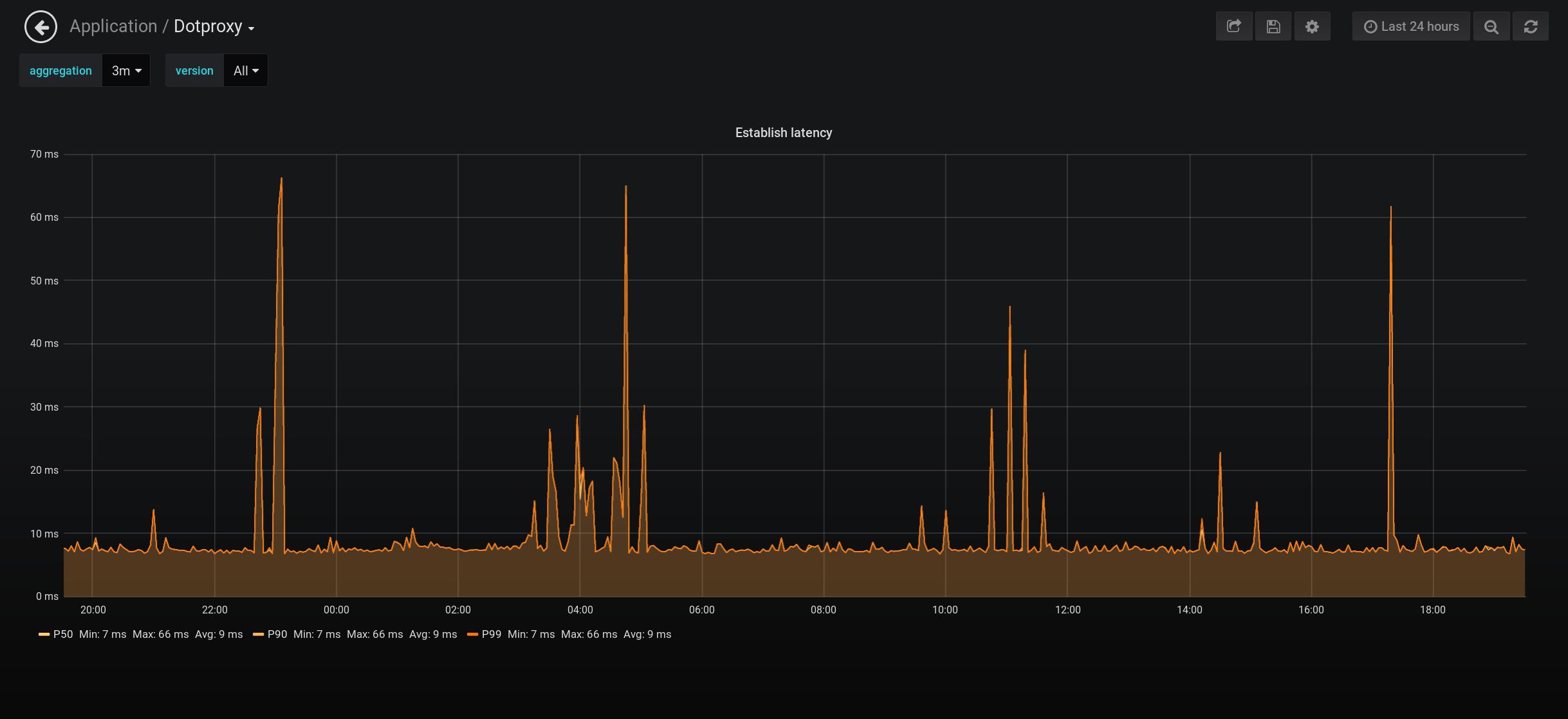
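The shape of that distribution (a low median with P90 and P99 sitting close together) is what you would expect when a minority of queries pay a roughly fixed connection setup cost. The Python sketch below is a toy model under stated assumptions: the 11.7 ms warm latency is the median reported above, while the 30.5 ms cold latency and the cold-query fractions are hypothetical values chosen for illustration, not measurements.

```python
import math

WARM_MS = 11.7  # query served over an already-open connection (reported median)
COLD_MS = 30.5  # assumed cost of a query that first opens a connection


def percentile(samples, p):
    """Nearest-rank percentile: smallest sample with at least p% at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def simulate(cold_fraction, total=1000):
    """Build a latency sample where cold_fraction of queries hit a cold connection."""
    cold = round(cold_fraction * total)
    return [COLD_MS] * cold + [WARM_MS] * (total - cold)


if __name__ == "__main__":
    for fraction in (0.05, 0.15):
        samples = simulate(fraction)
        print(fraction, [percentile(samples, p) for p in (50, 90, 99)])
```

In this model the P90 only jumps to the cold latency once more than 10% of queries need a new connection, which is consistent with traffic too sparse to keep pooled connections alive.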

Combining use of TCP Fast Open (supported natively on Linux since kernel version 3.6) and TLS session ticket-based resumption results in average connection establishment latency of less than 10 ms. This represents the time required to both open the TCP connection to the upstream server and complete a TLS handshake. Random network events occasionally cause sporadic jumps in latency, but this can be controlled by setting an aggressive TLS handshake timeout and using a load balancing policy that fails over the request to a different server.
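For readers who want to experiment with the same ideas outside of dotproxy, the following Python sketch shows session reuse and a bounded handshake using only the standard `ssl` and `socket` modules. It is not dotproxy's code: the function names and the timeout value are hypothetical, and TCP Fast Open is left out because this sketch does not configure it.

```python
import socket
import ssl
from typing import Optional


def make_context() -> ssl.SSLContext:
    """Client context that verifies the upstream's certificate and hostname."""
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def dial_upstream(host: str, port: int, ctx: ssl.SSLContext,
                  timeout_seconds: float,
                  session: Optional[ssl.SSLSession] = None) -> ssl.SSLSocket:
    """Open TCP, then complete a TLS handshake, offering `session` for resumption."""
    raw = socket.create_connection((host, port), timeout=timeout_seconds)
    try:
        # The socket timeout set above also applies to each blocking read and
        # write performed during the handshake, so a slow upstream fails fast.
        return ctx.wrap_socket(raw, server_hostname=host, session=session)
    except Exception:
        raw.close()
        raise


# Example usage (requires network access; host and port 853 are an example
# DNS-over-TLS endpoint, and 0.5 s is an arbitrary "aggressive" timeout):
#
#   ctx = make_context()
#   first = dial_upstream("cloudflare-dns.com", 853, ctx, 0.5)
#   saved = first.session          # may only be resumable after some I/O on TLS 1.3
#   first.close()
#   second = dial_upstream("cloudflare-dns.com", 853, ctx, 0.5, session=saved)
#   print(second.session_reused)   # True if the server accepted the resumption
```

A session must be reused with the same `SSLContext` that created it, which is one reason to keep a single long-lived context per upstream server.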

Deployment

Download

To get started, grab a precompiled release build for your Linux architecture at the releases index. A release build is automatically built and published for most commits on master.

Alternatively, if you have any recent version of the Go toolchain handy, you can compile the project from source.

Configuration

Before deploying dotproxy, create a configuration file, optionally following the example. This affords an opportunity to fine-tune most low-level network behaviors to optimize performance for the particular network environment in which dotproxy will be deployed. Documentation for all available configuration fields is in the README.

Usage

The binary accepts two flags, --config (required) for specifying the configuration file and --verbosity (optional) for controlling output logging verbosity:

$ dotproxy --config /path/to/config.yaml --verbosity info

Any configuration or application errors will be printed to the console.

Test end-to-end functionality with kdig:

# UDP

$ kdig -p <LISTENING PORT> github.com @127.0.0.1

# TCP

$ kdig +tcp -p <LISTENING PORT> github.com @127.0.0.1

You can also use Wireshark or similar to ensure that the proxied query is encrypted on egress.
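If kdig is not available, the UDP check can be approximated with a few lines of Python. This is a minimal sketch using only the standard library: it hand-builds an A-record query following the RFC 1035 wire format and sends it to the proxy's listening port. The function names and the fixed query ID are hypothetical, and the response is only checked superficially rather than fully parsed.

```python
import socket
import struct


def build_query(name: str, query_id: int = 0x1234, qtype: int = 1) -> bytes:
    """Encode a single-question DNS query (qtype 1 = A record, class IN)."""
    # Header: ID, flags (only "recursion desired" set), QDCOUNT=1, other counts 0.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # QNAME: each label is length-prefixed; a zero byte terminates the name.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    return header + qname + struct.pack("!HH", qtype, 1)


def response_ok(response: bytes, query_id: int = 0x1234) -> bool:
    """True if the response echoes our ID and carries RCODE 0 (no error)."""
    if len(response) < 12:
        return False
    (resp_id,) = struct.unpack("!H", response[:2])
    return resp_id == query_id and (response[3] & 0x0F) == 0


def query_udp(name: str, port: int, host: str = "127.0.0.1",
              timeout: float = 2.0) -> bytes:
    """Send the query to the proxy over UDP and return the raw response."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_query(name), (host, port))
        response, _ = sock.recvfrom(4096)
        return response
```

Calling `response_ok(query_udp("github.com", port))` with the proxy's listening port plays the same role as the UDP kdig command above.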

The example unit file shows how dotproxy can be daemonized and managed by systemd.

FAQ

Q: Why DNS-over-TLS and not DNS-over-HTTPS?

A: HTTP is a very heavy protocol which incurs a significant amount of additional overhead due to increased request and response payload size. Additionally, DNS-over-HTTPS traverses an awkward network abstraction boundary by leveraging an application-layer protocol for an inherently control plane-layer network operation. DNS-over-TLS maintains the original DNS protocol but simply wraps it with transport-layer encryption (which in itself is application protocol-agnostic).

Q: Which load balancing policy should I use?

A: The choice of load balancing policy depends heavily on the network environment of the deployment and the desired behavior in the event of upstream errors. For example, if it is most important that all upstream servers share an equal distribution of load, the HistoricalConnections policy may be the most appropriate. For latency-critical deployments in a network where all upstream servers are known to be fast (for example, an intra-network upstream), the Failover policy combined with aggressive I/O timeouts may be ideal (which effectively considers a server to have failed if it is too slow to respond, and tries the next one down the line). Alternatively, the Availability policy provides the most robust fault tolerance for servers whose behaviors are unpredictable (e.g. they are several network hops away and maintained by another entity).
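To give a feel for how two of these policies differ, here is a minimal Python sketch. The policy names come from dotproxy, but the code is an illustration rather than its implementation: `failover` and `LeastUsed` are hypothetical names, and treating HistoricalConnections as "pick the server that has handled the fewest connections so far" is an assumption based on the description above.

```python
from typing import Callable, Dict, Sequence


def failover(servers: Sequence[str], send: Callable[[str], bytes]) -> bytes:
    """Try servers in their configured order; move on when one errors or times out."""
    last_error = None
    for server in servers:
        try:
            return send(server)
        except OSError as err:  # includes TimeoutError and ConnectionError
            last_error = err
    raise ConnectionError("all upstream servers failed") from last_error


class LeastUsed:
    """Spread load evenly by always choosing the least-used server so far."""

    def __init__(self, servers: Sequence[str]) -> None:
        self.counts: Dict[str, int] = {server: 0 for server in servers}

    def pick(self) -> str:
        # Ties resolve to the earliest-configured server (dict insertion order).
        server = min(self.counts, key=self.counts.get)
        self.counts[server] += 1
        return server
```

With `failover`, an aggressive I/O timeout inside `send` is what turns "too slow" into "failed", so the first server handles everything as long as it stays fast. `LeastUsed` ignores latency entirely and simply keeps the per-server counts level.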

Q: Are other platforms supported?

A: To date, dotproxy has only been tested on Linux, and precompiled binaries are only published for Linux architectures. That said, you can build the project from source yourself on your platform of choice.